Earlier this week, my brand new Nvidia GeForce RTX 4070 Ti Super arrived. Having recently tested ChatGPT, GitHub Copilot, and JetBrains AI, I began to wonder if I could self-host such a service locally, to address privacy concerns and of course to save a few bucks 😊. I soon discovered ollama, a tool that allows you to run various open-source large language models on your own machine. Installation on Linux (or Windows Subsystem for Linux in my case) is simple:

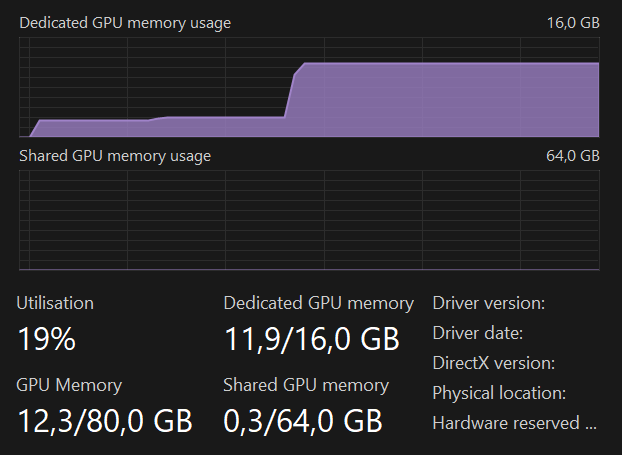

evert@wsl-debian $ curl -fsSL https://ollama.ai/install.sh | sh

Next we have to decide which model and what parameter count we want to run. I decided on the standard codellama model (there is also a variant fine-tuned for Python), with a parameter count of 13 billion, as that is the largest model that fits in the 16 GB of VRAM the 4070 Ti Super is equipped with. Running the model through ollama automatically pulls the necessary data:

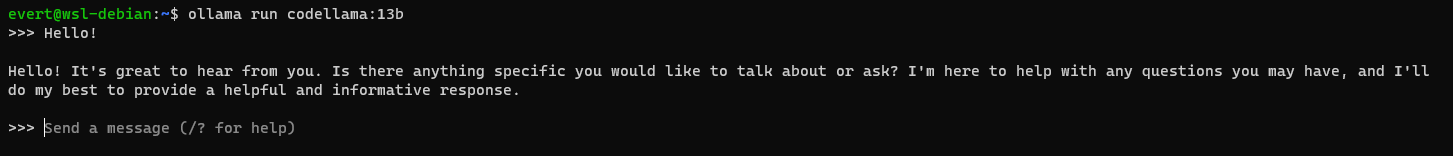

evert@wsl-debian $ ollama run codellama:13b

If everything went smoothly, you should be greeted by ollama's interactive prompt.

Apart from installing the latest Nvidia drivers and WSL kernel, I didn’t have to do anything to get ollama to recognize my GPU.
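As a rough sanity check on the 13 billion parameter choice, here is a back-of-the-envelope sketch in Python. It assumes the default codellama tags are 4-bit quantized at roughly 4.5 bits per weight, and it reserves an arbitrary 1.5 GB for the context cache and runtime buffers. Both numbers are my assumptions rather than measured values, and the function names are made up for illustration.

```python
# Rough VRAM estimate for quantized model weights.
# Assumption: ~4.5 bits per weight (4-bit quantization plus per-block scales);
# the real figure depends on the exact model file that ollama pulls.
BITS_PER_WEIGHT = 4.5
OVERHEAD_GB = 1.5  # assumed allowance for context cache and runtime buffers
VRAM_GB = 16       # RTX 4070 Ti Super


def estimated_vram_gb(params_billion: float) -> float:
    """Return an approximate memory footprint in GB (10^9 bytes)."""
    weight_gb = params_billion * BITS_PER_WEIGHT / 8
    return weight_gb + OVERHEAD_GB


def fits(params_billion: float, vram_gb: float = VRAM_GB) -> bool:
    """Check whether the estimated footprint stays within the given VRAM."""
    return estimated_vram_gb(params_billion) <= vram_gb


if __name__ == "__main__":
    # Illustrative Code Llama sizes, in billions of parameters.
    for size in (7, 13, 34):
        print(f"{size}b: ~{estimated_vram_gb(size):.1f} GB, fits: {fits(size)}")
```

Under these assumptions the 13b model needs roughly 9 GB and the 34b model over 20 GB, which is consistent with 13b being the largest variant that fits in 16 GB.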

The next step was finding a plugin capable of exposing the model within my IDE. I opted for Continue, as it supports my preferred IDEs: the JetBrains suite and Visual Studio Code. After installation, I removed the default GPT-3.5 and GPT-4 models from the settings and substituted them with our local model:

{
  "models": [
    {
      "title": "Code Llama (13b)",
      "provider": "ollama",
      "model": "codellama-13b"
    }
  ],
  ...
}

By using the ‘ollama’ provider, the plugin knows the model is available on port 11434. You can verify this yourself by opening http://localhost:11434 in a browser: it should show the message ‘Ollama is running’. Because WSL automatically forwards ports, you can use the model directly from your IDEs running under Windows.
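If you would rather check the server from a script than from a browser, the following is a minimal sketch using only the Python standard library. It assumes ollama is listening on its default address and that the model tag is the `codellama:13b` pulled earlier. The helper names (`server_status`, `build_generate_request`, `generate`) are my own and not part of ollama or Continue.

```python
import json
import urllib.request

# Assumptions: ollama listens on its default address, and the model tag
# matches the one pulled earlier with `ollama run codellama:13b`.
OLLAMA_URL = "http://localhost:11434"
MODEL = "codellama:13b"


def build_generate_request(prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for ollama's /api/generate endpoint."""
    payload = {"model": MODEL, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def server_status() -> str:
    """Fetch the root page, which is the same text the browser shows."""
    with urllib.request.urlopen(OLLAMA_URL, timeout=5) as resp:
        return resp.read().decode("utf-8").strip()


def generate(prompt: str) -> str:
    """Send the prompt to the model and return the generated text."""
    request = build_generate_request(prompt)
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    print(server_status())
    print(generate("Write a Python function that reverses a string."))
```

Setting `"stream": False` asks the server for a single JSON object instead of a stream of partial responses, which keeps the parsing down to one `json.loads` call.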

In my limited testing (generating unit tests for Django), the codellama model seemed more aware of the context of my Django models than the JetBrains AI Assistant. It’s worth noting, though, that the JetBrains AI Assistant uses different LLMs (I assume) depending on availability, so your experience may differ.